- Published on

Database | Integrating Zilliz Vector Database as Knowledge Base in Dify

CAUTION

This article was translated from Chinese by an AI model and may contain inaccuracies. If you find issues, please refer to the original or suggest corrections.

Update_2025-04-15

- The database was built in late November 2024, and within less than a month, I received an email notification that Zilliz Cloud Pipelines would be discontinued... The documentation has been updated, but the new features are not yet available. The previous solution process is no longer applicable, and major vendors' vector databases still aren't very user-friendly (expensive).

- Zilliz Cloud Pipelines discontinuation notice: https://docs.zilliz.com.cn/docs/pipelines-concepts

Zilliz Cloud Pipelines service is being gradually phased out and will be discontinued by the end of Q2 2025, replaced by new "Data In, Data Out" features. These features aim to simplify the vectorization process in Milvus and Zilliz Cloud. Starting January 10, 2025, Zilliz Cloud Pipelines will no longer accept new user registrations. Existing users can continue using the service within the monthly 100 RMB free trial quota until the discontinuation date. The service does not provide SLA support. We recommend using embedding APIs from model providers or open-source models to generate vectors.

Problem

Issues being addressed: Small capacity (200 MB) of Dify Professional edition knowledge base and inaccurate recall accuracy for long text retrieval.

Tools tested and their issues

- Dify's suggested solution connecting to AWS Bedrock knowledge base has complex implementation.

Dify platform cannot directly connect to AWS Bedrock Knowledge Base yet. Development teams need to reference Dify's API documentation for external knowledge base connections to manually create backend API services and establish connections with AWS Bedrock.

- Vector databases Zilliz, Qdrant, Weaviate - the latter two have immature online services.

- Dify's suggested solution connecting to AWS Bedrock knowledge base has complex implementation.

Challenges encountered when choosing Zilliz vector database

- Problem texts need to be vectorized before searching in Zilliz database. Zilliz Pipeline was configured with bge-base-zh-v1.5, so to save costs, had to find online-callable bge-base-zh-v1.5...

- Major domestic vendors mostly only support online calls to their own text embedding models...

- HuggingFace Inference API directly supports vector model bge-large-zh-v1.5 (excluding bge-base-zh-v1.5), but http response may take >20s...

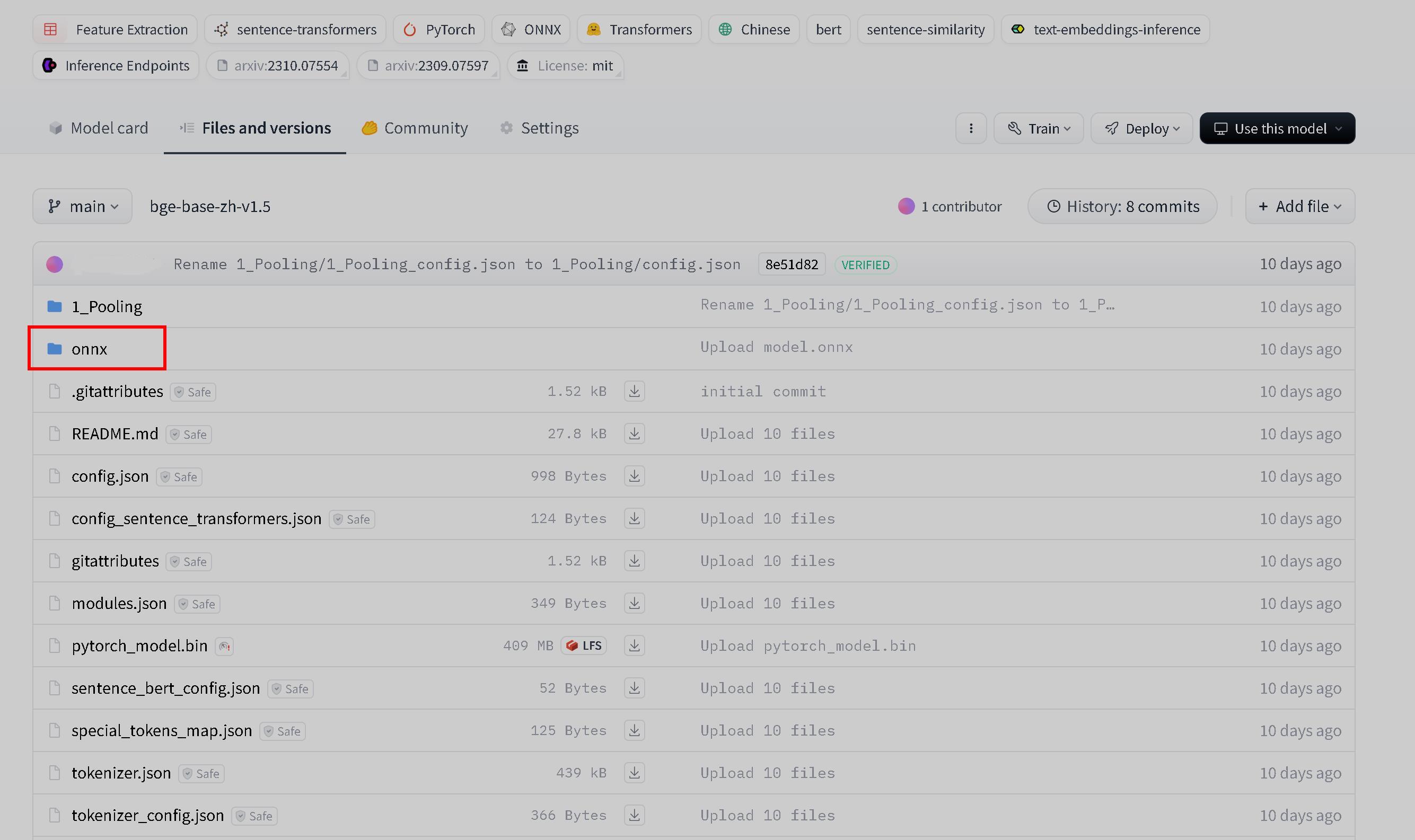

- HuggingFace Inference Endpoints allows custom online model configuration. Requires model.onnx file, which is a universal, cross-platform model representation format suitable for model exchange, deployment, and optimization Checked that bge-base-en-v1.5 has onnx, but bge-base-zh-v1.5 doesn't...

Solution Process

Download bge-base-zh-v1.5 locally, then configure model.onnx file

# Pythorch 框架 # 生成 bge-base-zh-v1.5 onnx 文件代码 import torch from transformers import BertModel, BertConfig import json # 读取配置文件 with open('bge-base-zh-v1.5/config.json', 'r') as f: config = json.load(f) # 创建 BertConfig 实例 bert_config = BertConfig(**config) # 确保 config 中的参数与 BertModel 兼容 bert_config.output_hidden_states = False # 如果不需要隐藏状态 bert_config.return_dict = True # 加载模型 model = BertModel(bert_config) # 加载模型权重(权重文件名为 'pytorch_model.bin') model_path = 'bge-base-zh-v1.5/pytorch_model.bin' model.load_state_dict(torch.load(model_path), strict=False) model.eval() # 创建一个虚拟输入 # 假设输入为 batch_size=1, sequence_length=128 # 这里的输入是 token ids 和 attention masks input_ids = torch.ones(1, 128, dtype=torch.long) attention_mask = torch.ones(1, 128, dtype=torch.long) # 导出模型为 ONNX output_onnx = 'BAAI_bge_base_zh_v1.5_model.onnx' torch.onnx.export(model, (input_ids, attention_mask), output_onnx, opset_version=14, do_constant_folding=True, input_names=['input_ids', 'attention_mask'], output_names=['last_hidden_state', 'pooler_output'], dynamic_axes={ 'input_ids': {0: 'batch_size', 1: 'sequence_length'}, 'attention_mask': {0: 'batch_size', 1: 'sequence_length'}, 'last_hidden_state': {0: 'batch_size', 1: 'sequence_length'}, 'pooler_output': {0: 'batch_size'} }) print(f"模型已导出为 ONNX 格式: {output_onnx}")Create a new model repository on HuggingFace, synchronize the configured model, then deploy via Deploy - Inference Endpoints

HuggingFace Inference Endpoints Use self-deployed online model to vectorize problem text, Feature Extraction: https://huggingface.co/docs/api-inference/tasks/feature-extraction

import requests API_URL = "https://<url>.endpoints.huggingface.cloud" headers = {"Authorization": "Bearer <token>"} def query(payload): response = requests.post(API_URL, headers=headers, json=payload) return response.json() output = query({ "inputs": "text", }) print(output)Perform hybrid search through Zilliz knowledge base: https://docs.zilliz.com.cn/reference/restful/hybrid-search-v2

# 此操作根据矢量相似性和标量筛选搜索实体,并使用指定的策略对结果进行重新排序 # metricType 当前搜索所使用的度量类型。该值应与目标 Collection 的度量类型相同 # annsField 向量值字段 # strategy unsupported rank type COSINE # limit 本次查询要求返回的最大 Entity 数量 # offset 本次查询结果中需要跳过的 Entity 数量 # limit 与 offset 的总和不能超过 16384 import requests # 混合检索请求的 url url = "https://<集群 ID>.serverless.ali-cn-hangzhou.cloud.zilliz.com.cn/v2/vectordb/entities/hybrid_search" headers = { "Authorization": "Bearer <token>", "Content-Type": "application/json" } payload = { "collectionName": "<collectionName>", "search":[ { "data": [ [ 768 embeddings ] ], "annsField": "<Vector_Field>", "limit": 5, "offset": 0, "outputFields": [ "*" ] } ], "rerank": { "strategy": "rrf", "params": { "k": 10 } ], "outputFields": [ "<Specified_Field>" ] } response = requests.post(url, headers=headers, json=payload) response_json = response.json() print(response.json())Locally generated text vectors can be used for Zilliz online vector retrieval

# 文本向量化用作查询词 # 768-bge-base-en-v1.5: https://huggingface.co/BAAI/bge-base-en-v1.5 # 768-bge-base-zh-v1.5: https://huggingface.co/BAAI/bge-base-zh-v1.5 # 1024-bge-large-zh-v1.5: https://huggingface.co/BAAI/bge-large-zh-v1.5 from transformers import AutoTokenizer, AutoModel import torch # 加载模型和分词器 model_name = "bge-base-zh-v1.5" tokenizer = AutoTokenizer.from_pretrained(model_name) model = AutoModel.from_pretrained(model_name) # 输入文本 text = "文本" # 对文本进行分词,并设置最大长度为 512 inputs = tokenizer(text, return_tensors="pt", padding=True, truncation=True, max_length=512) # 使用模型生成向量 with torch.no_grad(): outputs = model(**inputs) # 获取最后一层的隐藏状态(即向量表示) embeddings = outputs.last_hidden_state # 通常我们取第一个 token 的向量作为句子的向量表示 sentence_embedding = embeddings[:, 0, :].squeeze() # 确保向量维度 assert sentence_embedding.size(0) == 768, "向量维度不为 768" # 将张量转换为列表并输出 sentence_embedding_list = sentence_embedding.tolist() print(sentence_embedding_list)